Social effectiveness seems like a skill with incredibly high returns on investment. I recognize that many of my problems achieving my goals stem from fear of social situations: I'm afraid to talk to people and afraid to ask them for things. Partially I worry about making a bad impression, but mostly I think I just have some intrinsic fear of people.

Social effectiveness is not just about talking to people; it also means being reliably able to convince people of things during conversations, such as your level of intelligence, attractiveness, or social effectiveness. If you can effectively charm rich people, you can probably get much farther in achieving your goals; if you can effectively charm attractive people, you can have a more successful sex life.

We're trying lots of ways to improve social effectiveness -- fashion being the one which I will focus on today. The way you dress provides lots of bits of data about your personality, and so you should carefully select your clothing to provide the bits you want to provide. Seeming more attractive is always better (not just in dating, but in business too!). Fashion can also make you seem conscientious (if you are put together carefully) or higher status.

We had our second fashion field trip this weekend, and so it seemed like time to write a post.

A few weeks ago we had sessions on what makes good fashion. Wendy already wrote about the content, so I won't go into too much depth there.

Luke and Hugh are the fashion instructors. To give you a sense of their fashion style, Luke usually wears designer jeans, a designer T-shirt or monocolored button-down shirt, belt, and shiny shoes. He also spikes his hair. Luke's look is designed to be imposing and impressive -- he's six foot four, and his fashion seems designed to accentuate this. It is considered fairly mainstream, though the spiked hair is nontraditional; he also sometimes wears a shiny belt buckle and leather wristband, which push him a bit towards the "rocker" category.

Hugh doesn't have nearly as consistent a style. The first day he showed up, he was wearing basically all white -- white button-down shirt, white khaki pants, white belt, with black military boots. His hair is long and dyed black with red highlights, and he wears it either draped to his shoulders or tied into a ponytail. Besides the all-white outfit, he's also worn an all-black suit (black jacket, trousers, shirt and tie), and other interesting outfits that I don't remember very well. He is considered "very goth".

The rest of this post is me describing things I've bought. I am not really willing to put lots of silly photos of myself online, so you'll have to settle for descriptions. Do Google Image Search if you're unsure of a term.

On the first trip, we went to Haight St. in San Francisco, which is quite famous for being a fashionable place to shop. There are a lot of interesting clothes shops there. Much of what you can find on Haight is alternative fashion rather than mainstream fashion, but there's stuff for everyone. There's a steampunk store (Distractions), a military surplus store (Cal Surplus), indie (Ceiba), goth (New York Apparel); there are several thrift stores, shoe shops, and random other fashion stores that I didn't go into.

I went to Distractions first and tried on a full outfit there -- a stretchy black pinstripe shirt with leather accents, black pinstripe pants, black top hat, studded belt and leather wristband. It was pretty awesome, but I would never wear it. Someone said it could be a good clubbing outfit, but I don't really go clubbing.

However, I liked the pinstripe pants and belt enough to buy them. My belt is actually super awesome; it's made of four separate leather components connected by rings. I've gotten lots of compliments on it. The pants are fairly muted by themselves, and go quite well with colorful shirts and shoes. They are a little too warm to wear in the summer though.

The military surplus store was another place where I got some good stuff. I tried on military boots and work shirts. The military boots are basically thick black boots with lots of lacing, and sometimes a zipper down the side. I liked the style, but didn't find boots that fit. I did find a boring black work shirt. Some people got these awesome black commando turtleneck sweaters -- acrylic sweaters with interesting features like epaulets on the shoulders.

I picked up some tight-fitting shirts (Henley and v-neck tees) at American Apparel and thrift stores; I wanted casual shirts that look good, and I found some at these places.

Separately from the fashion trips, I picked up some Converse high-top fashion sneakers, which have served me rather well. They're cranberry colored and they go super well with most of my outfits. I think that was one of the better fashion choices I've made.

Anyway, last weekend was another fashion trip, this time to the Union Square Mall in downtown San Francisco. This was a much more mainstream-oriented trip; we went to big chain stores like Express, American Eagle, and Guess. Express seems to be a great place for mainstream fashion; I liked a broad variety of their styles, and it was stuff that fit me. I ended up getting a cranberry colored button-down shirt to go with my Converse. I also got a new pair of dark jeans -- most of my jeans were medium dark blue, but these are darker, and more on the gray side.

At Guess, Luke handed me a shirt and told me to try it on. I did, and it turned out to be a really awesome shirt: it was a medium light blue button-down shirt which fit me very well, with epaulets on the shoulders. I wasn't planning to buy anything else but this shirt fit me well enough, and I really liked the style, so I bought it.

I tried a shirt at Hugo Boss. The style and fit were fabulous, but it was a $175 shirt, so I didn't buy that one. Someday, maybe.

Sunday, 31 July 2011

Saturday, 23 July 2011

Anticipations and Bayes

Crap. Missed my deadline again on weekly updates. Apologies.

I'll review more of what we've been doing in sessions over the last week or two. It's been some more epistemic rationality tricks -- that is, tricks for knowing the right answer. (This is as opposed to "instrumental rationality" or tricks for achieving what you want.)

Accessing your anticipations: Sometimes our professed beliefs differ from our anticipations. For instance, some stuff (barbells and a bench) disappeared from our back yard last week. I professed a belief that they weren't stolen. I wanted to believe they weren't stolen. I said I thought it was 40% likely they weren't stolen. But when someone offered me a bet at even odds as to whether some other cause of their disappearance would arise in a week, my brain rejected the bet: Emotionally, it seemed that I would probably lose that bet. (Consistency effects made me accept the bet anyway, so now I'm probably out 5 dollars.)

When your professed beliefs differ from your anticipations, you want to access your anticipations, because they are what controls your actions. We have learned some tricks for accessing your anticipations. One is imagining (or actually being offered) a bet: which side would you prefer to be on? Another trick is imagining a sealed envelope with the answer, or your friend about to type the question into Google. Do your anticipations change what answer you expect to see? One more trick is visualizing a concrete experiment to test this belief. When the experiment comes up with a result, are you surprised when it goes the way you "believe" it goes, or are you surprised when it doesn't?

The naïve belief-testing procedure: when you're wondering about something, ask yourself the following questions: "If X were true, what would I see? If X weren't true, what would I see?"

I'll use the weights-stolen example again. If the weights were stolen, I would expect to see the weights missing (yes); that *all* the weights were stolen (in fact, they left the kettlebell and a few of the weights); that the gate had been left open the previous night (undetermined); that other things were stolen too (also not the case). If the weights weren't stolen, I would expect them to come back, or to eventually hear why they were taken (I didn't hear anything); to learn that the gate had been locked (I didn't learn this).

This can help you analyze the evidence for a belief. It highlights the evidence in favor of the belief, and does an especially good job of making you realize when certain bits of evidence aren't very strong, because they support both sides of the story. (Example: when testing the belief "my friend liked the birthday present I gave him," you might consider the evidence "he told me he liked it" to be weak, because you would expect your friend to tell you he liked it whether or not he actually liked it.)

It is called "naïve" for a reason, though: it doesn't make you think about the prior probability of the belief being true. Consider the belief "the moon is made of Swiss cheese." I would expect to observe the moon's surface as bumpy and full of holes if it were made of Swiss cheese, and I would be less likely to observe that if it weren't. I do observe it, so it is evidence in favor of that belief, but the prior probability of the moon being made of cheese is quite low, so it still doesn't make me believe it.

In order to better understand the effects of priors and bits of evidence, we've been studying the Bayesian model of belief propagation. You should read An Intuitive Explanation of Bayes' Theorem by Eliezer, if you're interested in this stuff. I'll just point out a few things here.

First, we model pieces of evidence (observables) as pertaining to beliefs (hidden variables). When determining your level of belief in an idea, you start with a prior probability of the belief being true. There are many ways to come up with a prior, but I use a fairly intuitive process which corresponds to how often I've observed the belief in the past. I could use my own experience as the prior -- "When people give me a small gift, I like it only about 30% of the time." So for someone else liking my small gift, I could start with the prior probability of 30%.

If I don't have past experience which would lead me to a prior, I could start with some sense of the complexity of the belief. I haven't been to the moon. "The moon is made of cheese" is a very complex belief, because it requires me to explain how the cheese got there. "The moon is made of rock" is much simpler, because I know at least one other body which is made of rock (Earth). I might still have to explain how the rock got there, but at least there's another example of a similar phenomenon.

Once you know your priors, you consider the evidence. You can formulate evidence in terms of likelihood ratios, or in terms of probabilities of observing the evidence given the belief. In either case, you can mathematically transform one to the other, and then mathematically compute the new degree of belief (the new probability) given the prior and the likelihood ratio. (The math is simple: if your prior odds are 1:3 (25%) and your evidence was 10 times more likely to be produced if the belief were true than if it were false -- 10:1 -- then you multiply the odds (1:3 * 10:1 = 10:3) and end up with a posterior odds ratio of 10:3, or 10/13, or about 77%. If this didn't make sense, go read Eliezer's intuitive explanation, linked above.)
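Here is a minimal sketch of that odds arithmetic in Python. The helper names (`probability_to_odds`, `odds_to_probability`, `update_odds`) are my own, not from any library. The first example uses the 1:3 prior and 10:1 likelihood ratio from above. The second reuses the gift example with the 30% prior, plus two made-up likelihoods (95% and 85%) that are purely illustrative assumptions.

```python
from fractions import Fraction


def probability_to_odds(p):
    """Convert a probability p into odds in favor, p : (1 - p)."""
    return p / (1 - p)


def odds_to_probability(odds):
    """Convert odds in favor back into a probability."""
    return odds / (1 + odds)


def update_odds(prior_odds, likelihood_ratio):
    """Bayesian update in odds form: posterior odds = prior odds * likelihood ratio."""
    return prior_odds * likelihood_ratio


# Example from the text: prior odds 1:3, evidence 10 times more likely if true.
posterior_odds = update_odds(Fraction(1, 3), Fraction(10, 1))
posterior_prob = odds_to_probability(posterior_odds)  # 10/13, about 77%

# Gift example: 30% prior that my friend liked the gift.
# ASSUMED likelihoods (not from the post): he says "I like it" 95% of the
# time if he liked it, and 85% of the time if he didn't.
gift_prior_odds = probability_to_odds(Fraction(30, 100))
gift_likelihood_ratio = Fraction(95, 100) / Fraction(85, 100)
gift_posterior_prob = odds_to_probability(
    update_odds(gift_prior_odds, gift_likelihood_ratio)
)

print(posterior_odds, float(posterior_prob))
print(float(gift_posterior_prob))
```

With those assumed numbers the gift belief barely moves (from 30% to roughly 32%), which is the "weak evidence supports both sides" effect in numeric form.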

We practiced this procedure of doing Bayesian updates on evidence. We explored the ramifications of Bayesian evidence propagation, such as the (somewhat) odd effect of "screening off" (often called "explaining away" in the Bayesian-network literature): if you know your grass could get wet from a sprinkler or the rain, and you observe the grass is wet, you assign some level of belief to the propositions "it rained recently" and "the sprinkler was running recently". If you later hear someone complaining about the rain, then since rain is sufficient to cause the wet grass, you should downgrade the probability that the sprinkler was also running, simply because most of the situations which would cause wet grass do not contain both rain and sprinklers.

The idea of screening off seems rather useful. Another example: if you think that either "being really smart" or "having lots of political skill" is sufficient to become a member of the faculty at Brown University, then when you observe a faculty member, you guess they're probably quite smart, but if you later observe that they have lots of political skill, then you downgrade their probability of being smart.
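The wet-grass example can be checked by brute-force enumeration. This is only a sketch under assumptions I've made up for illustration: rain and the sprinkler are independent, with prior probabilities of 20% and 30%, and the grass is wet exactly when at least one of them happened.

```python
from itertools import product

# ASSUMED priors, chosen only for illustration.
P_RAIN = 0.2
P_SPRINKLER = 0.3


def worlds():
    """Yield (rain, sprinkler, wet, probability) for every possible world."""
    for rain, sprinkler in product([True, False], repeat=2):
        p_rain = P_RAIN if rain else 1 - P_RAIN
        p_sprinkler = P_SPRINKLER if sprinkler else 1 - P_SPRINKLER
        wet = rain or sprinkler  # either cause is sufficient
        yield rain, sprinkler, wet, p_rain * p_sprinkler


def prob_sprinkler_given(condition):
    """P(sprinkler | condition), where condition filters worlds by (rain, wet)."""
    matching = [(s, p) for r, s, w, p in worlds() if condition(r, w)]
    total = sum(p for _, p in matching)
    return sum(p for s, p in matching if s) / total


p_given_wet = prob_sprinkler_given(lambda rain, wet: wet)
p_given_wet_and_rain = prob_sprinkler_given(lambda rain, wet: wet and rain)

print(f"P(sprinkler | wet)       = {p_given_wet:.2f}")
print(f"P(sprinkler | wet, rain) = {p_given_wet_and_rain:.2f}")
```

Under these assumptions, seeing wet grass raises the sprinkler's probability from 30% to about 68%, and then learning that it rained drops it back to 30%, since the rain already accounts for the wet grass.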

Thursday, 14 July 2011

Basic Rationality Training

I guess it's well past time to report on Anna's sessions. I would summarize them as "basic rationality training," and they encompass a wide variety of skills, which together tend to produce more accurate thought and more productive conversation.

Asking for examples was the first one. Humans seem to think much more accurately with examples than with abstract ideas. Example: your friend goes around saying "Harry Potter is stupid". You could interpret this in many ways: the book itself is stupid, or he doesn't like the book, or the character is stupid, or the character does stupid actions sometimes, etc. What a lot of people will do here is argue with whatever interpretation jumps to their mind first. Instead, what you should do is ask for an example. Maybe your friend will say "well, the other day I was walking down the hallway and someone jumped out from a door and shouted 'avada kedavra' at me, and I was like 'really?'." Now you probably understand what interpretation he means, and you won't go arguing about all the smart things that Harry does in the books (or in the Methods of Rationality).

Noticing rationalization and asking for true causes of your beliefs: I've talked about these, in "How to Enjoy Being Wrong." Briefly, when someone says something which you disagree with, ask yourself why you disagree -- what experience you had which led to your belief.

Fungibility: when you notice you're doing an action (perhaps because someone asks you "why do you do X?"), ask yourself what goals the action is aiming for, then notice if there are other ways of achieving those goals. If you feel resistance to a new strategy, it is likely that you actually did the action for some other reason -- go back and try to optimize that goal separately.

Example: I read science fiction novels. What goals does this serve? Imagining the future is attractive; spending time with a book in my hands is pleasant; I want to follow the plot.

To achieve the "imagining the future" and "follow the plot" goals, I could go read spoilers of the novel on the Internet. But I feel resistance to that strategy. Hmm. I might miss something? That's probably not it... I guess I just get some sort of ego-status by knowing that I read the whole book without skipping anything. And this makes me realize that I read books because I want to seem well-read.

Anyway, if you do this procedure on a regular basis, sometimes you'll notice actions which don't make much sense given the goals you're optimizing for. When you do, you can make a change and optimize your life.

Value of Information and Fermi Calculations: Do math fast and loose to determine whether you're wasting your time. One of the most useful pieces of math is how much a piece of information is worth. For instance, if I'm trying to start a company selling pharmaceuticals on the internet, I want to know what the regulations will be like, and I don't see an easy way to estimate this just from what I know. I would have to do research to figure this out. But I can estimate the size of the market -- maybe 100M people in the US who take drugs regularly, and lots of drugs cost well over $1 a day, so at least $100M/day, or very roughly $50bn/year. My business sense tells me that regulations are likely to be the main barrier to entry for competitors (there's so much incentive for the existing players to put up barriers that they've probably done it).

Let's do out the probabilities:

- Target: 10% chance of regulatory burden being surmountable by a startup

- 25% chance of me actually deciding to try and execute on the idea, given that the regulatory burden seems surmountable

- 5% chance of me succeeding at getting 1% of the estimated market ($500M/yr), given that I decide to execute on the idea

- 25% of the company is an estimate of what I will own if I succeed

So the expected value of getting this information appears to be at least $100,000, if it can actually establish that 10% probability. I can probably obtain this information for a lot cheaper than that, so I should go look up regulatory burdens of starting an online pharmaceutical company.
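For anyone who wants to redo the arithmetic, here is a rough sketch in Python that just multiplies the factors listed above. The variable and function names are mine. It uses the post's round figures ($50bn/year market, 1% share) and treats one year of the company's revenue share as the payoff, which is a simplifying assumption rather than a real valuation.

```python
# Round numbers taken from the estimate above.
MARKET_PER_YEAR = 50e9   # rough market size, dollars per year
MARKET_SHARE = 0.01      # share captured if the company succeeds
P_SURMOUNTABLE = 0.10    # regulatory burden is surmountable by a startup
P_EXECUTE = 0.25         # I actually try, given it looks surmountable
P_SUCCESS = 0.05         # I succeed, given that I try
OWNERSHIP = 0.25         # fraction of the company I'd own on success


def expected_value(p_surmountable, p_execute, p_success, payoff, ownership):
    """Multiply the chain of conditional probabilities by my share of the payoff."""
    return p_surmountable * p_execute * p_success * payoff * ownership


revenue_if_success = MARKET_PER_YEAR * MARKET_SHARE  # $500M per year
value_of_information = expected_value(
    P_SURMOUNTABLE, P_EXECUTE, P_SUCCESS, revenue_if_success, OWNERSHIP
)

print(f"Revenue if successful: ${revenue_if_success:,.0f} per year")
print(f"Expected value:        ${value_of_information:,.0f} per year")
```

The product comes out a bit above $150,000, consistent with the "at least $100,000" figure. Changing any single factor by 2x changes the answer by 2x, so the result is only good to an order of magnitude, which is all a Fermi calculation is for.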

Obviously this analysis has a lot of flaws, but it still seems very useful.

Sunk cost fallacy and self-consistency: Noticing when you are making decisions based on sunk costs or a previous decision you want to be consistent with. Poker example: I was bluffing on a hand, someone raised me, and I was tempted to reraise in order to be consistent with that bluff. Non-poker example: Driving down the street to the grocery store, I realize I want to go to the bank first, because otherwise I will have ice cream in the trunk on the way home. Even though it would be faster to go to the bank first, and I wouldn't risk my ice cream melting, I am already going in the direction of the grocery store, and I don't want to turn around now.

That's most of what we've covered. It's really useful and applicable material. Even though I could have understood the theory from reading, it seems like I've learned the material better by being forced to come up with examples on the fly.

Tuesday, 12 July 2011

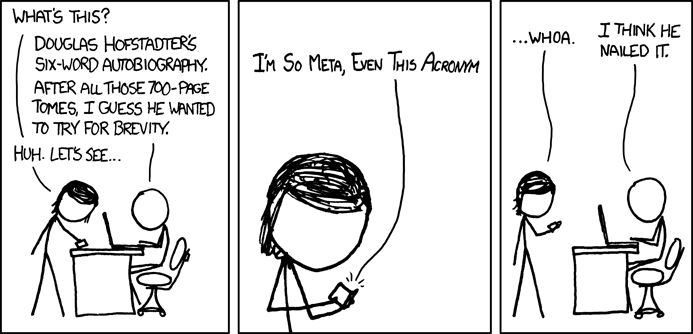

Metacamp Metasession, Meta Meta Meta

It was the weekend, a week and a half ago. Michael Curzi (from the minicamp) came by. He played a few hands of poker with us, which involved him never folding anything, and after a while I tired of it and took him up on a bet that was higher than I felt comfortable with. As we played, he asked us what we thought of the camp, how things had been and how we felt about things.

It turned out we had a number of complaints, ranging from impatience with PowerPoints to insufficient agency. Those of us who were awake talked late into the night, devising more efficient ways to present material, more engaging forms of discussion, and more effective bonding exercises. Weeks of productivity training had well indoctrinated in us the value of small, immediately-available actions. "Next actions," we called them. We resolved to take actions to resolve our complaints, in the form of calling a meta-meeting to voice our thoughts and propose our ideas.

We thought hard about when to call the meeting. Jeremy checked and found the schedule empty for Tuesday afternoon's session (this would have been for the afternoon of July 5th), so I wrote a draft of an email inviting everyone to join us for a meta-meeting during, as opposed to after, Tuesday's afternoon session. Lincoln proposed scheduling for Tuesday evening, a less imposing time, but John favored occupying the session so that we could set a precedent of scheduling ourselves when there is nothing scheduled. I myself preferred afternoon, to take a position of authority in scheduling, and so that if the organizers already had something planned, they would be forced to interact with us as though we were organizers too. We took a vote and decided on Tuesday afternoon.

Immediately, there was trouble. There was already something scheduled for Tuesday afternoon. Even worse, Jasen would not get home until Tuesday night, and that was no good at all, because it was really important that such a relevant discussion have Jasen in it. Anna wrote back saying she was doing sessions on Tuesday, Thursday, and Friday. We rescheduled for Wednesday afternoon. By that time, Andrew would be the only person still out of town. Unbeknownst to us, we displaced Anna's Wednesday session, but I guess it was all the better that we thought there wasn't one, or I might have been too worried about inconveniencing Anna to displace her session. On Tuesday, we worked out an outline of the general areas we intended to cover, and who would lead each part of the discussion.

Preparing for a meeting of any significance is quite terrifying. I was to open the meeting, I was to communicate a brief summary of why the meeting was called, and that it was important. I rehearsed several times what I was to say, but each time, what I'd rehearsed the previous time went into a black hole of never-was-and-never-had-been. 15:00 came and went, and we had, in addition to Jasen and Anna, a number of other instructors with us as well. Blake opened a shared Google document where we could take notes during the meeting, and several of us opened the document on our laptops. At ten minutes past the hour, even though we were still missing two people, we started the meeting.

After a brief opening introduction, John spoke about our current roles as approximately students taking classes. He recalled conversations with Jasen where Jasen had been eager to hear and receptive to his ideas. He then envisioned a camp where we, the participants, were more like coordinators ourselves, agents who altered the camp instead of passively receiving it.

Then, Blake led a discussion about different ways to discuss topics and possibly learn more about them. Many of the proposed changes involved more engaging activities, smaller group discussions, and fewer lecture-style presentations. It seemed that some presentations had been dull or basic, assuming less intelligence of us and thereby producing uninteresting or repetitive material. Some people felt that we should try to get what we can out of each session, but I felt that our time is sufficiently valuable that it's not worthwhile to spend three hours in an uninteresting session extracting what we can. It was said that simply talking with each other is an invaluable resource made available through our being thrown together. Lincoln proposed hand signs to signal impressions without interrupting the speaker. Someone had the idea that we keep a Google document open during sessions so we could put in our thoughts if we didn't get a chance to say them out loud. There was the concern that having laptops would distract people by providing internet, but others said it helped to be able to look things up on Wikipedia during classes. We took a vote, and it turned out that we found the internet an average of 4.36/10 useful to have during class. We also voted on the usefulness of learning business, and that turned out to be an average of 6.00/10. We resolved to fill out surveys after sessions as well in order to give more feedback about sessions, and Anna gave us a list of useful things to ask on surveys: the main idea of the class, the most surprising thing, and the most confusing thing.

Next, we voted on how comfortable each person felt with sharing critiques right then during the meta-meeting, and it averaged to 5.47/10. This was worrisome, because it showed that a significant portion of people were significantly uncomfortable with sharing their thoughts, or at least not entirely comfortable. We went around the circle and asked each person what was the largest obstacle to speaking freely, and the responses fell into several clusters: unwillingness to say negative (and possibly offensive) things, the pressure of speaking when put "on the spot," feeling like an outsider not directly involved, and lacking in confidence.

At this point, we took a few minutes to go into the Google document and write down things we wished to discuss. At the end of five minutes, we went through everyone's notes, one person's at a time. We discussed the idea of having one-to-one conversations with each other, and that was 7.42/10 popular. Julian wrote that things seemed to be more organized when everyone woke up and meditated together, so we resolved to enforce getting up and starting on time. Some people wanted to exercise in the mornings instead. We decided to try 10 minutes of exercise followed by 20 minutes of meditation, instead of the usual half hour of meditation. It was also proposed that people might choose not to attend a session if they felt it was not relevant to them, and if there was something else they wanted to do. It was decided that everyone was to attend each session on time, but that once there, individuals might present to Jasen for approval an argument as to why they are better off doing something else. Thomas wanted notes on each day's activities, so we decided on having two scribes take notes each day. The topic of cooking and dishes came up, and it seemed some people were less happy than others about how much dish-washing and cleaning they were doing, so we put up a dish-washing sign-up schedule. A recurrent theme was the preference for smaller group discussions where each person is more involved, so we divided people into four groups, to be reshuffled each week. In the afternoon, two of the groups have a two-hour "Anna session" while the other two groups take turns having a one-hour "Zak session," and in the evening, they switch. Of course, the "Anna session" is not always together, nor is the "Zak session" always led by Zak, but it was a structure that produced different sizes of groups with different levels of involvement. We committed to holding more meta-feedback meetings.

We took notes of Next Actions, since it is always easy to talk about things and not do anything. In order to avoid the Bystander Effect, we assigned specific tasks to individual people: I was to schedule people to talk to each other; John was to draft checklists; Blake and Jasen were to schedule more meta-meetings; Jasen, Peter, and Jeremy were to wake people up in the mornings; and Thomas was to assign people to scribe each day.

At this point, most of our decisions have been implemented. We get up together, exercise, meditate, and have our small-group sessions. We have scribing and conversation schedules and have been making much more use of Google documents. We fill out a short survey at the end of every session. We do more personal project and personal study than before. Things are a bit different. Are they better? Time will tell.

But I do feel that several significant things went definitively right in all of this.

The first is that we took something we thought and used it to alter the state of the world. To me, simply the act of calling a meeting and trying to change things identifies my fellow Megacamp participants as a group who, by some combination of ourselves and our influences on each other, take real actions. I feel it is an important step from merely talking about things to enacting them. In that step, we transcended our cast role as students, becoming neither passive commentators nor theoreticians, but causal agents.

The second is that things were conducted with a great deal of dignity and respect. Rather than feeling like us against the organizers, it seemed that we were all pursuing a common goal, which was to make the camp maximally effective. Therefore, it was easy to listen to everyone's ideas, examine them, and decide on next actions, instead of the all-too-common status-fight of trying to seem intelligent and shooting others down. I felt that we took each other seriously, and every instructor took us seriously, so that in general we were good about not getting offended and looking for the most effective solutions.

A final thing was that we were able to commit to specific actions and then abide by them, because it is all too easy to make a resolution and then break it. If that happened, nothing would change at all. But we have held to our decisions, and that makes progress possible.

I am very proud to be among this group of peers and instructors. I feel that this issue was handled admirably, and that we worked reasonably and constructively to resolve our areas of discontent. Looking back on it all, I respect everyone a great deal, both the instructors and the participants, for their readiness to act and their resistance to becoming entrenched in well-defined roles (as in the Zimbardo experiment). I am eager to see where the next weeks take us, and I am confident that even should things go amiss, I am not trapped. In a Nomic sense, I feel that things are (and always will be) mutable, so long as there exists the initiative to change rules.

Instead of a line of lyric, I will conclude with this colorful, irritated passage from a colorful, irritated essay:

The young specialist in English Lit, having quoted me, went on to lecture me severely on the fact that in every century people have thought they understood the Universe at last, and in every century they were proven to be wrong. It follows that the one thing we can say about our modern "knowledge" is that it is wrong.

The young man then quoted with approval what Socrates had said on learning that the Delphic oracle had proclaimed him the wisest man in Greece. "If I am the wisest man," said Socrates, "it is because I alone know that I know nothing." The implication was that I was very foolish because I was under the impression I knew a great deal.

Alas, none of this was new to me. (There is very little that is new to me; I wish my correspondents would realize this.) This particular thesis was addressed to me a quarter of a century ago by John Campbell, who specialized in irritating me. He also told me that all theories are proven wrong in time.

My answer to him was, "John, when people thought the Earth was flat, they were wrong. When people thought the Earth was spherical, they were wrong. But if you think that thinking the Earth is spherical is just as wrong as thinking the Earth is flat, then your view is wronger than both of them put together."

--- Isaac Asimov, http://hermiene.net/essays-trans/relativity_of_wrong.html

Peace and happiness,

wobster109

Saturday, 9 July 2011

How to enjoy being wrong

Note: This is a draft of a post which, if it turns out to be useful, I intend to post to Less Wrong directly. For now, please correct the post and add your own personal experiences and thoughts.

Related to: Reasoning Isn't About Logic, It's About Arguing; It is OK to Publicly Make a Mistake and Change Your Mind.

Examples of being wrong

A year ago, in arguments or in thought, I would often:

- avoid criticizing my own thought processes or decisions when discussing why my startup failed

- overstate my expertise on a topic (how to design a program written in assembly language), then have to quickly justify a position and defend it based on limited knowledge and cached thoughts, rather than admitting "I don't know"

- defend a position (whether doing an MBA is worthwhile) based on the "common wisdom" of a group I identify with, without any actual knowledge, or having thought through it at all

- defend a position (whether a piece of artwork was good or bad) because of a desire for internal consistency (I argued it was good once, so felt I had to justify that position)

- defend a political or philosophical position (libertarianism) which seemed attractive, based on cached thoughts rather than actual reasoning

- defend a position ("cashiers like it when I fish for coins to make a round amount of change"), hear a very convincing argument for its opposite ("it takes up their time, other customers are waiting, and they're better at making change than you"), but continue arguing for the original position. In this scenario, I actually updated -- thereafter, I didn't fish for coins in my wallet anymore -- but still didn't admit it in the original argument.

- provide evidence for a proposition ("I am getting better at poker") where I actually thought it was just luck, but wanted to believe the proposition

- when someone asked "why did you [do a weird action]?", I would regularly attempt to justify the action in terms of reasons that "made logical sense", rather than admitting that I didn't know why I made a choice, or examining myself to find out why.

We rationalize because we don't like admitting we're wrong. (Is this obvious? Do I need to cite it?)

Over the last year, I've self-modified to mostly not mind being wrong, and in some cases even enjoy being wrong. I still often start to rationalize, and in some cases get partway through the thought, before noticing the opportunity to correct the error. But when I notice that opportunity, I take it, and get a flood of positive feedback and self-satisfaction as I update my models.

How I learned how to do this

The fishing-for-coins example above was one which stood out to me retrospectively. Before I read any Less Wrong, I recognized it as an instance where I had updated my policy. But even after I updated, I had a negative affect about the argument because I remembered being wrong, and I wasn't introspective enough to notice and examine the negative affect.

I still believed that you should try to "win" an argument.

Eventually I came across these Sequences posts: The Bottom Line and Rationalization. I recognized them as making an important point; they intuitively seemed like they would explain very much of my own past behavior in arguments. Cognitively, I began to understand that the purpose of an argument was to learn, not to win. But I continued to rationalize in most of the actual arguments I was having, because I didn't know how to recognize rationalization "live".

When applying to the Rationality Mega-Camp (Boot Camp), one of the questions on the application was to give an instance where you changed a policy. I came up with the fishing-for-coins example, and this time, I had positive feelings when remembering the instance, because of that cognitive update since reading the Sequences. I think this positive affect was me recognizing the pattern of rationalization, and understanding that it was good that I recognized it.

Due to the positive affect, I thought about the fishing-for-coins example some more, and imagined myself into that situation, specifically imagining the desire to rationalize even after my friend gave me that really compelling argument.

Now, I knew what rationalization felt like.

At the Rationality Mega-Camp, one of the sessions was about noticing rationalization in an argument. We practiced actually rationalizing a few positions, then admitting we were rationalizing and actually coming to the right answer. This exercise felt somewhat artificial, but at the very least, it set up a social environment where people will applaud you for recognizing that you were rationalizing, and will sometimes call you out on it. Now, about once a day, I notice that I avoid getting into an argument where I don't have much information, and I notice active rationalization about once every two days.

The other thing we practiced is naming causes, not justifications. We attempt to distinguish between the causes of an action -- why you *really* do something -- and myriad justifications / rationalizations of the action, which are reasons you come up with after the fact for why it made logical sense to do a thing.

How you can learn to recognize rationalization, and love to be wrong

These steps are based mostly on my personal experience. I don't know for sure that they'll work, but I suspect they will.

You'll do this with a close friend or significant other. Ideally they're someone with whom you have had lots of frustrating arguments. It would be even better if it's someone who also wants to learn this stuff.

First, read these Sequences: The Bottom Line and Rationalization. Be convinced that being right is desirable, and that coming up with post hoc reasons for something to be true is the opposite of being right: it's seeming right while being wrong, it's lying to yourself and deceiving others. It is very bad. (If you're not convinced of these points, I don't think I can help you any further.)

Next, take 10 minutes to write down memories of arguments you had with people where you didn't come to an agreement by the end. If possible, think of at least one argument with this friend, and at least one argument with someone else.

Next, take 10 minutes to write down instances from your personal life where you think you were probably rationalizing. (You can use the above arguments as examples of this, or come up with new examples.) Imagine these instances in as much explicit detail as possible.

Next, tell your friend about one of these instances. Describe how you were rationalizing, specifically what arguments you were using and why they were post-hoc justifications. Have your friend give you a hug, or high-five or something, to give a positive affect to the situation.

This step is optional, but it seems like it will often help: actually work out the true causes of your behavior, and admit them to your friend. It's OK to admit to status-seeking behavior, or self-serving behavior. Remember, this is your close friend and they've agreed to do the exercise with you. They will think more of you after you admit your true causes, because it will benefit them for you to be more introspective. Again with the hug or high-five.

Next, rehearse these statements, and apply them to your daily life:

- "When I notice I'm about to get into an argument, remind myself about rationalizing."

- "When I notice illogical behavior in myself, figure out its true causes."

- "When someone else states a position, ask myself if they might be rationalizing."

- "When someone else seems upset in an argument, ask myself if they might be rationalizing."

- "When I notice rationalization in myself, say 'I was rationalizing' out loud."

- "When I notice I've updated, say 'I was wrong' out loud."

- "When I say 'I was rationalizing', ask for a high five or give myself a high five."

- "When I say 'I was wrong', ask for a high five or give myself a high five."

Regarding the high five: that's to give positive affect, for conditioning purposes. I am not sure whether this step will work. I didn't do it and I still learned it, but I had a very strong inherent desire to be right rather than to seem right and be wrong. If you don't have that desire, my hypothesis is that the high five / social conditioning will help to instill that desire.

And let me know in the comments how it goes.

Thursday, 7 July 2011

Monday, 4 July 2011

Internal Family Systems

Note: I (Lincoln) am posting here now instead of at my personal blog so that there can be comments attached to the post.

Another self-improvement technique we've been learning is IFS, which is another bullshit acronym. It stands for Internal Family Systems, but this has nothing to do with families. I guess it is internal to one's brain. Systems is a fluff word.

The elephant/rider metaphor is extended to indicate the presence of multiple elephants, all pulling in different directions. IFS as a procedure has you pinpoint the behavior of a particular elephant, in order to better model it, understand its behavior and drives, and possibly change it.

IFS terminology for the elephants is simply "a part [of you]". I will continue to call them elephants.

The procedure works like this: you identify an elephant that is causing you to behave in a certain way. Maybe it's annoying, maybe it makes you upset or maybe it is helpful. Then you imagine it in your brain, personify it, make friends with it and negotiate with it. All the elephants are considered to have good intentions -- they're providing you with useful data, at the very least, or averting you from pain, or whatever. The IFS process is supposed to help you align the elephants better towards achieving your goals.

If you're going to do IFS on yourself or on your friend, here are some questions you can ask:

- Come up with an elephant to talk to. Think of something you keep doing and regretting, or a habit you'd like to change.

- Think of a specific instance where you engaged in this behavior or thought or emotional pattern.

- Imagine it in as much concrete detail as possible.

- How would you feel about someone else engaging in this behavior or thought or emotional pattern at this time? Your goal is to feel curious. If you're not feeling curious, ask that feeling or concern to step aside so that you can be curious about it.

- Now, regarding the elephant directly:

  - Personify it. What does it look like? Does it have a name? How do you experience it physically? (e.g., a prickling in the back of the neck)

  - How much does it trust you?

  - What is it saying?

  - What feelings, thoughts, and behaviors does it produce?

  - When is it active?

  - What is it trying to accomplish?

  - How long has it been around?

  - What data is it giving you?

  - Why are you grateful for this data?

  - What is it afraid of?

At this point, if you've been thoughtfully and honestly answering the questions, you should have a better model of the elephant and why it's behaving in that way. If you see a solution, try to make an agreement with the elephant, but if not, you can still gain well-being by having modeled it in this way, and maybe it won't bother you as much in the future. Or perhaps you will gain its trust.

Anyway, the procedure is pretty cute. I've used it on some friends, who reported some success after the fact, so I am inclined to believe it has some merit. At the very least, it seems generally healthy to treat your different emotions and drives as autonomous entities, as opposed to trying to suppress them. Not much luck on applying it to myself, but nothing which seems well suited for application either -- I mainly tried procrastination, but it seems better suited to emotional issues and anxieties.

Friday, 1 July 2011

Could you Google. . .

". . . platypus penises, please?"

--- John
